Posts Tagged: misinformation

Twitter merges misinformation and spam teams following whistleblower claims

Twitter is making a major change to its organization after former security head Peiter "Mudge" Zatko accused the company of having lax security and bot problems. According to Reuters, Twitter is merging its health experience team, which is in charge of clamping down on misinformation and harmful content on the website, with its service team. The latter reviews profiles when they're reported and takes down spam accounts. Together, the combined group will be called Health Products and Services (HPS).

The group will be led by Ella Irwin, who joined the company in June and had previously worked for Amazon and Google. Reuters says Irwin sent a memo to staff members, telling them that HPS will "ruthlessly prioritize" its projects. "We need teams to focus on specific problems, working together as one team and no longer operating in silos," Irwin reportedly wrote.

In a statement sent to Reuters, a Twitter spokesperson said the reshuffling "reflects [the company's] continued commitment to prioritize, and focus [its] teams in pursuit of [its] goals." A source also told the news organization that the teams dealing with harmful and toxic content have had major staff departures recently. Merging these two teams may be the best way to ensure that all important roles are filled going forward.

This news comes on the heels of the revelation that Zatko filed a whistleblower complaint against his former employer. In it, he said Twitter has "extreme, egregious deficiencies" when it comes to security and that it prioritizes user growth over cleaning up spam. Shortly after The Washington Post reported on Zatko's complaint, which also raises concerns about national security, lawmakers from both sides of the aisle announced that they're looking into his claims.

In an email to employees, Twitter CEO Parag Agrawal defended the company and echoed its spokesperson's statement that Zatko's complaint is a "false narrative that is riddled with inconsistencies and inaccuracies." You can read the whole memo, obtained by Bloomberg, below:

"Team,

There are news reports outlining claims about Twitter’s privacy, security, and data protection practices that were made by Mudge Zatko, a former Twitter executive who was terminated in January 2022 for ineffective leadership and poor performance. We are reviewing the redacted claims that have been published, but what we’ve seen so far is a false narrative that is riddled with inconsistencies and inaccuracies, and presented without important context.

I know this is frustrating and confusing to read, given Mudge was accountable for many aspects of this work he is now inaccurately portraying more than six months after his termination. But none of this takes away from the important work you have done and continue to do to safeguard the privacy and security of our customers and their data. This year alone, we have meaningfully accelerated our progress through increased focus and incredible leadership from Lea Kissner, Damien Kieran, and Nick Caldwell. This work continues to be an important priority for us, and if you want to read more about our approach, you can find a summary here.

Given the spotlight on Twitter at the moment, we can assume that we will continue to see more headlines in the coming days – this will only make our work harder. I know that all of you take a lot of pride in the work we do together and in the values that guide us. We will pursue all paths to defend our integrity as a company and set the record straight.

See you all at #OneTeam tomorrow,

Parag"

Facebook labeled 180 million posts for election misinformation

Facebook just offered its first look at the scale of its fight against election misinformation. In the lead-up to the 2020 presidential election, Facebook slapped warning labels on more than 180 million posts that shared misinformation. And it remove…

Engadget

Twitter has labeled 300,000 tweets for election misinformation

A little more than a week after the election, Twitter is giving some additional insight into the effectiveness of its efforts to curb the spread of election misinformation. Between October 27 and November 11, the company labeled about 300,000 tweets…

Engadget

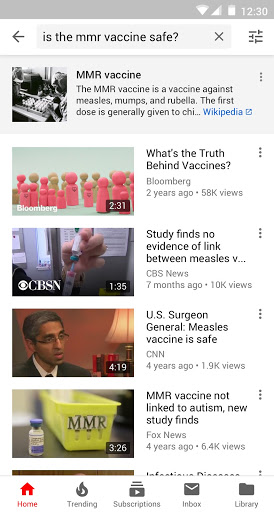

Google will ban coronavirus conspiracy ads to fight misinformation

Google is amping up its fight against coronavirus-related misinformation by banning ads that “[contradict] authoritative scientific consensus” about the pandemic. That means websites and apps can no longer make money from running advertisements promo…

Engadget RSS Feed

The North Face pulls Facebook ads over hate and misinformation policies

Criticism of Facebook’s approaches to hate speech and misinformation may hit the social network where it hurts the most: its finances. CNN reports that clothing brand The North Face has become the most recognizable company yet to join an advocacy gro…

Engadget RSS Feed

WHO joins TikTok to fight coronavirus misinformation

Engadget RSS Feed