Posts Tagged: we’re

Best Buy’s Geek Squad agents say they were hit by mass layoffs this week

Geek Squad agents have been flooding Reddit with images of their badges and posts about “going sleeper” after the company reportedly conducted mass layoffs this week. A former employee who spoke to 404 Media said they were sent an email notifying them to work from home on Wednesday and were then called individually to be told the news about their jobs. Some, per 404 Media’s sources and numerous Reddit posts, were longtime Geek Squad agents who had been with the company for more than 10 or even 20 years. Best Buy has not yet responded to Engadget’s request for comment.

There has been an outpouring of support for the laid-off workers on the unofficial Geek Squad subreddit, where many have lamented the loss of jobs they’d dedicated much of their lives to and noted that things in the lead-up had been heading in a concerning direction. Some commented that their hours had dwindled in recent months, with one former employee telling 404 Media it’s been “a struggle to get by.”

Best Buy conducted mass layoffs affecting employees at its retail stores just last spring, and as The Verge reports, CEO Corie Barry indicated during the company’s February earnings call that more layoffs were coming in 2024 as Best Buy shifts resources toward AI and other areas.

This article originally appeared on Engadget at https://www.engadget.com/best-buys-geek-squad-agents-say-they-were-hit-by-mass-layoffs-this-week-185720480.html?src=rss

Engadget is a web magazine with obsessive daily coverage of everything new in gadgets and consumer electronics

The top 7 bestselling phones of 2023 were all … you guessed it

One brand dominated the top 10 chart for bestselling smartphones in 2023, according to data released this week by research firm Counterpoint.

Digital Trends

Their children were shot, so they used AI to recreate their voices and call lawmakers

The parents of a teenager who was killed in Florida’s Parkland school shooting in 2018 have started a bold new project called The Shotline to lobby for stricter gun laws in the country. The Shotline uses AI to recreate the voices of children killed by gun violence and send recordings through automated calls to lawmakers, The Wall Street Journal reported.

The project launched on Wednesday, six years after a gunman killed 17 people and injured more than a dozen at a high school in Parkland, Florida. It features the voices of six children and young adults, some as young as ten, who lost their lives to gun violence across the US. Once you type in your zip code, The Shotline finds your local representative and lets you place an automated call from one of the six victims, in their own voice, urging stronger gun control laws. “I’m back today because my parents used AI to recreate my voice to call you,” says the AI-generated voice of Joaquin Oliver, one of the teenagers killed in the Parkland shooting. “Other victims like me will be calling too.” At the time of publishing, more than 8,000 AI calls had been submitted to lawmakers through the website.

“This is a United States problem and we have not been able to fix it,” Oliver’s father Manuel, who started the project along with his wife Patricia, told the Journal. “If we need to use creepy stuff to fix it, welcome to the creepy.”

To recreate the voices, the Olivers used a voice cloning service from ElevenLabs, a two-year-old startup that recently raised $80 million in a round of funding led by Andreessen Horowitz. Using just a few minutes of vocal samples, the software is able to recreate voices in more than two dozen languages. The Olivers reportedly used their son’s social media posts for his voice samples. Parents and legal guardians of gun violence victims can fill out a form to submit their children’s voices to The Shotline to be added to its repository of AI-generated voices.

The project raises ethical questions about using AI to generate deepfakes of voices belonging to dead people. Last week, the Federal Communications Commission declared that robocalls made using AI-generated voices were illegal, a decision that came weeks after voters in New Hampshire received calls impersonating President Joe Biden telling them not to vote in their state’s primary. An analysis by security company Pindrop found that the Biden audio deepfake was created using software from ElevenLabs.

The company’s co-founder Mati Staniszewski told the Journal that ElevenLabs allows people to recreate the voices of dead relatives if they have the rights and permissions. But so far, it’s not clear whether parents of minors had the rights to their children’s likenesses.

This article originally appeared on Engadget at https://www.engadget.com/their-children-were-shot-so-they-used-ai-to-recreate-their-voices-and-call-lawmakers-003832488.html?src=rss

Zelle may refund your money if you were scammed

Zelle recently made a huge change to its policy that gives victims of certain scams the chance to get their money back. The payment processor has confirmed to Engadget that it started reimbursing customers for impostor scams, such as those perpetrated by bad actors pretending to be banks, businesses and government agencies, as of June 30 this year. Its parent company Early Warning Services, LLC, said this “goes beyond legal requirements.”

As Reuters noted when it reported Zelle’s policy change, federal law can only compel banks to reimburse customers for payments made without their authorization, not for transfers customers made themselves. The payment processor, which is run by seven US banks including Bank of America, JPMorgan Chase and Wells Fargo, explained that it defines scams as instances in which a customer made a payment but didn’t get what they were promised. Zelle has had an anti-fraud policy since it launched in 2017, but it only recently started returning money to customers who were scammed, possibly due to increasing scrutiny and pressure from authorities.

“As the operator of Zelle, we continuously review and update our operating rules and technology practices to improve the consumer experience and address the dynamic nature of fraud and scams,” Early Warning Services, LLC, told Engadget. “As of June 30, 2023, our bank and credit union participants must reimburse consumers for qualifying imposter scams, like when a scammer impersonates a bank to trick a consumer into sending them money with Zelle. The change ensures consistency across our network and goes beyond legal requirements.

“Zelle has driven down fraud and scam rates as a result of these prevention and mitigation efforts consistently from 2022 to 2023, with increasingly more than 99.9% of Zelle transactions are without any reported fraud or scams,” it added.

A series of stories published by The New York Times in 2022 put a spotlight on the growing number of scams and fraud schemes on Zelle. The publication interviewed customers who were tricked into sending money to scammers but were denied reimbursement because they had authorized the transactions. Senator Elizabeth Warren also conducted an investigation last year and found that “fraud and scams [jumped] more than 250 percent from over $90 million in 2020 to a pace exceeding $255 million in 2022.” In November 2022, The Times reported that the seven banks that own Zelle were gearing up for a policy change that would reimburse scam victims.

On Zelle’s “Report a Scam” page, users can submit the scammer’s details, including who they claimed to be, their name, website and phone number. They also have to provide the payment ID for the transfer, the date it was made and a description of what the transaction was supposed to be for. Zelle said it will share the information with the recipient’s bank or credit union to help keep others from falling victim to the same scheme, but it’s unclear how Zelle determines whether a scam refund claim is legitimate.

“Zelle’s platform changes are long overdue,” Senator Warren told Reuters. “The CFPB (Consumer Financial Protection Bureau) is standing with consumers, and I urge the agency to keep the pressure on Zelle to protect consumers from bad actors.”

This article originally appeared on Engadget at https://www.engadget.com/zelle-may-refund-your-money-if-you-were-scammed-062826335.html?src=rss

‘Overwatch 2’ director explains why hero missions were canceled

When Blizzard announced earlier this week that it had canceled Overwatch 2 hero missions, a central part of its player vs. environment (PvE) story mode, fans were none too pleased. So director Aaron Keller published a blog post today to ease the concerns and offer more transparency about the development team’s “incredibly difficult decision.”

Hero missions, revealed in 2019, were designed to provide a “deeply replayable” branch of the game based on RPG-like talent trees. Although their progression would have been separate from the main game (to avoid giving hero mission players an unfair advantage), hero missions were still a central part of the hype Blizzard used during the past four years of marketing the title. But the publisher ultimately found that they were pulling too many development resources away from the live game.

“When we launched Overwatch in 2016, we quickly started talking about what that next iteration could be,” Keller wrote. “Looking back at that moment, it’s now obvious that we weren’t as focused as we should have been on a game that was a runaway hit. Instead, we stayed focused on a plan that was years old.” That years-old plan traces back to the team’s work on Project Titan, Blizzard’s canceled MMORPG. The creators initially saw Overwatch as a vehicle for reviving some of the ideas from that scrapped project.

“Work began on the PvE portion of the game and we steadily continued shifting more and more of the team to work on those features.” But, Keller says, “Scope grew. We were trying to do too many things at once and we lost focus. The team built some really great things, including hero talents, new enemy units and early versions of missions, but we were never able to bring together all of the elements needed to ship a polished, cohesive experience.”

Keller says the team’s ambition for hero missions was devouring resources at the expense of the core gameplay. “We had an exciting but gargantuan vision and we were continuously pulling resources away from the live game in an attempt to realize it,” said Keller. “I can’t help but look back on our original ambitions for Overwatch and feel like we used the slogan of ‘crawl, walk, run’ to continue to march forward with a strategy that just wasn’t working.”

The decision to abandon hero missions came down to prioritizing present quality over past promises. “We had announced something audacious,” Keller reflected. “Our players had high expectations for it, but we no longer felt like we could deliver it. We needed to make an incredibly difficult decision, one we knew would disappoint our players, the team, and everyone looking forward to Hero Missions. The Overwatch team understands this deeply — this represented years of work and emotional investment. They are wonderful, incredibly talented people and truly have a passion for our game and the work that they do.”

Overwatch 2’s story missions — minus the canned hero missions — are set to arrive in season six, scheduled for mid-August. PvE aspects include a single-player version with a leaderboard, in-game and out-of-game stories and “new types of co-op content we haven’t yet shared.” Before that content arrives, there’s still season five, set to launch in June.

This article originally appeared on Engadget at https://www.engadget.com/overwatch-2-director-explains-why-hero-missions-were-canceled-211629105.html?src=rss

The Morning After: Biometric devices with military data were being sold on eBay

German researchers who purchased biometric capture devices on eBay found sensitive US military data stored on the devices’ memory cards. According to The New York Times, that included fingerprints, iris scans, and even photographs, names and descriptions of the individuals, mostly from Iraq and Afghanistan. Many of those individuals had worked with the US Army and could be targeted if the devices fell into the wrong hands, according to the report. One device was purchased at a military auction, and the seller said they were unaware that it contained sensitive data. The fix would have been easy, too: the US military could have eliminated the risk by simply removing or destroying the memory cards before selling the devices.

– Mat Smith

The biggest stories you might have missed

- Razzmatazz review: A delightful (and delightfully pink) drum machine
- LG's 2023 soundbars offer Dolby Atmos and wireless TV connections
- What we bought: The standing desk I chose after a lot of research

A third Blizzard studio pushes to unionize

The campaign involves all non-management workers.

Workers at Proletariat, a Boston-based studio Blizzard bought earlier this year, announced they recently filed for a union election with the National Labor Relations Board (NLRB). Proletariat is the third Activision Blizzard studio to announce a union drive in 2022. But whereas past campaigns at Raven Software and Blizzard Albany involved only the quality assurance workers at those studios, the effort at Proletariat includes all non-management workers. The employees at Proletariat say they aim to preserve the studio’s “progressive, human-first” benefits, including its flexible paid time off policy and robust healthcare options. They also want to protect the studio from crunch, the compulsory overtime imposed during game development.

LG's new minimalistic appliances have upgradeable features and fewer controls

Upgradeable, to an extent.

LG is taking a more minimalist approach to its kitchen appliances in 2023, with less showy profiles, colors and, seemingly, controls. While we’re not getting a close-up look at all the dials and buttons yet, the appliances look restrained compared to previous years’ models. In the past, we’ve seen a washing machine whose flagship feature was an entire extra washing machine. There was also a dryer that had two doors. Just because. LG says it’s used recycled materials across multiple machine parts, adding that its latest appliances also require fewer total parts and less energy than typical kitchen appliances. This would dovetail with the company’s announcements at the start of the year, where LG said it would offer upgradability for its home appliances. So far, that’s included new filters for certain use cases and software upgrades with new washing programs for laundry machines.

US House of Representatives bans TikTok on its devices

Lawmakers and staff members who have TikTok on their phones would have to delete it.

TikTok is now banned on any device owned and managed by the US House of Representatives, according to Reuters. The House's Chief Administrative Officer (CAO) reportedly told all lawmakers and their staff in an email that they must delete the app from their devices, because it's considered "high risk due to a number of security issues." Anyone found to still have the app on their phone will be contacted to make sure it's deleted.

LG teases a smaller smartphone camera module with true optical telephoto zoom

It could lead to smaller smartphone camera bumps.

LG may not make smartphones anymore, but it's still building components for them. The company's LG Innotek arm just unveiled a periscope-style camera module with true optical zoom across a 4x to 9x telephoto range. Most smartphone cameras use hybrid zoom setups that pair a few fixed optical zoom levels (typically 2x, 3x, 10x, etc.) with digital zoom to fill in the gaps (2.5x, 4.5x, etc.), which reduces detail. LG's "Optical Zoom Camera," however, contains a zoom actuator with movable components, like the zoom lens on a mirrorless or DSLR camera. That would help retain full image quality through the entire zoom range while potentially reducing the size and number of modules required. Could this mean the death of the camera bump?
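To put rough numbers on why filling zoom gaps digitally costs detail, here is a simplified Python sketch. It assumes digital zoom is a plain center crop from the strongest fixed lens that doesn't exceed the requested zoom, and it ignores the upscaling, pixel binning and multi-frame processing real phones apply. The 1x/3x/10x lens set and both function names are hypothetical illustrations, not a description of any specific phone or of LG's module.

```python
from collections.abc import Sequence


def retained_pixel_fraction(target_zoom: float, optical_levels: Sequence[float]) -> float:
    """Share of sensor pixels left after digitally cropping up to target_zoom.

    Simplifying assumption: digital zoom is a plain center crop taken from the
    strongest fixed optical lens that does not exceed the requested zoom.
    """
    usable = [level for level in optical_levels if level <= target_zoom]
    if not usable:
        raise ValueError("requested zoom is wider than the widest lens")
    crop_factor = target_zoom / max(usable)  # magnification done in software
    return 1.0 / crop_factor**2              # cropped area shrinks with the square


def continuous_optical_fraction(target_zoom: float, zoom_min: float = 4.0,
                                zoom_max: float = 9.0) -> float:
    """A continuous optical zoom module reaches any level in its range with the lens itself."""
    if not zoom_min <= target_zoom <= zoom_max:
        raise ValueError("requested zoom is outside the module's optical range")
    return 1.0


# Hypothetical hybrid setup with fixed 1x, 3x and 10x lenses.
fixed_lenses = [1.0, 3.0, 10.0]
for zoom in (4.5, 6.0, 9.0):
    hybrid = retained_pixel_fraction(zoom, fixed_lenses)
    optical = continuous_optical_fraction(zoom)
    print(f"{zoom}x: hybrid keeps {hybrid:.0%} of pixels, continuous optical keeps {optical:.0%}")
```

At 6x, for instance, the hypothetical hybrid setup crops the 3x image by a factor of 2 and keeps only a quarter of the sensor's pixels, which is the kind of loss a continuous 4x to 9x optical range is meant to avoid.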

The Apple Watch Series 8 might not get the redesign you were hoping for

Rumors have suggested that the Apple Watch Series 8 could get a major redesign with flat edges. Now, that may not be happening.

Wearables | Digital Trends

We’re going to the red planet! All the past, present, and future missions to Mars

A detailed list of all operational and planned missions to Mars, along with explanations of their objectives.

Emerging Tech | Digital Trends

FBI email servers were hacked to target a security researcher

The FBI appears to have been used as a pawn in a fight between hackers and security researchers. According to Bleeping Computer, the FBI has confirmed that intruders compromised its email servers early today (November 13th) to send fake messages claiming recipients had fallen victim to data breaches. The emails tried to pin the non-existent attacks on Vinny Troia, the head of dark web security firms NightLion and Shadowbyte.

The nonprofit intelligence organization Spamhaus quickly shed light on the bogus messages. The attackers used legitimate FBI systems to send them, with email addresses scraped from the American Registry for Internet Numbers (ARIN) database, among other sources. More than 100,000 addresses received the fake emails in at least two waves.

The FBI described the hack as an "ongoing situation" and didn't initially have more details to share. It asked email recipients to report messages like these to the bureau's Internet Crime Complaint Center or the Cybersecurity and Infrastructure Security Agency. Troia told Bleeping Computer he believed the perpetrators might be linked to "Pompomourin," a persona that has attacked the researcher in the past.

Feuds between hackers and the security community aren't new. In March, attackers exploiting Microsoft Exchange servers tried to implicate security journalist Brian Krebs using a rogue domain. However, it's rare that they use real domains from a government agency like the FBI as part of their campaign. While that may be more effective than usual (the FBI was swamped with calls from anxious IT administrators), it might also prompt a particularly swift response — law enforcement won't take kindly to being a victim.

These fake warning emails are apparently being sent to addresses scraped from ARIN database. They are causing a lot of disruption because the headers are real, they really are coming from FBI infrastructure. They have no name or contact information in the .sig. Please beware!

— Spamhaus (@spamhaus) November 13, 2021

Nintendo ‘gigaleak’ reveals the classic games that never were

If you’ve ever wondered how Nintendo’s classic games evolved before they reached store shelves, you might now have a good chance to find out. According to VGC (via Eurogamer), a “gigaleak” of Nintendo art assets and source code from the mid-1990s has sur…

Engadget RSS Feed

Galaxy S20 Tactical Edition is announced, but it’s not the Galaxy Active you were hoping for

I don’t know who was asking for this, but Samsung has unveiled the Galaxy S20 Tactical Edition, or Galaxy S20 TE. It looks to be a descendant of the Galaxy Active phones, but cranked up to 11. It’s… strange. This doesn’t look like a phone that you’ll be able to stroll […]

Top 5 reasons why we’re excited for the Google Pixel 4a

With the Google Pixel 4a right around the corner, it’s looking like one of the most anticipated phones of the year. The regular Pixel 4 was a bit disappointing, but last year’s Pixel 3a set the budget market on fire. The Pixel 4a seems like it can do the same for a few very good […]

Safer Internet Day: 5 ways we’re building a safer YouTube

Keeping you and your family safe online is a top priority at YouTube. Today on Safer Internet Day, we’re sharing some of the ways we work to keep YouTube safe, and how you can be more in control of your YouTube experience. From built-in protections to easy-to-use tools, we hope you’ll take advantage of these tips:

1. Learn more about the content available on YouTube

We work hard to maintain a safe community and have guidelines that explain what we allow and don’t allow on YouTube. Most of what we remove is first detected by machines, which means we actually review and remove prohibited content before you ever see it. But no system is perfect, so if you see something that doesn’t belong on YouTube, you can flag it for us and we’ll quickly review it. If you want to know what happened to a video you flagged, just visit your reporting history to find out.

2. Learn more about what data we collect and how to update your privacy settings

Check out Your Data in YouTube to browse or delete your YouTube activity and learn more about how we use data. Your YouTube privacy settings include managing your search and watch history. If you prefer more private viewing, you can use Incognito mode on the YouTube mobile app or Chrome browser on your computer.

You can also take the Privacy Checkup and we’ll walk you through key privacy settings step-by-step. For YouTube, you’ll be able to do things like easily pause your YouTube History, or automatically delete data that may be used for your recommendations.

3. Check in on your security settings and keep your passwords safe

Head over to Security Checkup for personalized recommendations to help protect your data and devices across Google, including YouTube. Here, you can manage which third-party apps have access to your account data and also take the Password Checkup, which tells you if any of your passwords are weak and how to change them. In addition, you can access Password Manager in your Google Account to help you remember and securely store strong passwords for all your online accounts.

4. Learn more about how ads work and control what ads you see on YouTube

We do not sell your personal information to anyone, and give you transparency, choice and control over how your information is used as a part of Google. If you’re curious about why you’re seeing an ad, you can click on Why this ad for more information. If you no longer find a specific ad relevant, you can choose to block that ad by using the Mute this ad control. And you can always control the kinds of ads you see, or turn off ads personalization any time in your Ad Settings.

5. Try the YouTube Kids app, built with parental controls

We recommend parents use YouTube Kids if they plan to allow kids under 13 to watch independently. YouTube Kids is a separate app with family-friendly videos and parental controls. We work hard to keep the videos on YouTube Kids suitable for kids and have recently reduced the number of channels on the app. The app also empowers parents to choose what’s the right experience for their kids and family, such as which content is available for their kids, how long they can use the app for and much more.

In addition to YouTube Kids, we also recently made changes to Made for Kids content on YouTube to better protect children’s privacy.

Finally, if you have any questions or feedback, let us know! We are available 24/7 on @TeamYouTube, or you can always check out our YouTube Help Community to learn about the latest announcements. Responsibility is our number one priority, and together with you, we will continue our ongoing efforts to build a safer YouTube.

— The YouTube Team

Apple, Amazon were rare bright spots in a shrinking tablet market

Engadget RSS Feed

Galaxy Note 10+ photos have leaked, looks like they were captured on a potato

It’s about that time when people start snapping photos of the as-yet-unreleased Galaxy Note 10, showing everything off on the internet well before Samsung even has an official launch date for the phone. And, like all good leaked photos, these are exceptionally blurry despite modern smartphones sometimes being able to compete […]

We’re finally seeing the first signs of dark mode coming to the Gmail app

Google has been rolling out dark mode updates to many of its major apps, but there are still a few that are missing the blacked-out interface. Gmail is a big one, which is pretty strange considering just how popular Gmail is around the world and how important it is to Google’s business. It looks […]

What we’re watching: ‘Lucifer,’ ‘Wu-Tang Clan: Of Mics and Men’

Engadget RSS Feed

Want to join the Talk Android team? We’re hiring!

Have an interest in Android and Google news? Want to use that interest and write for a team with other smartphone enthusiasts? Talk Android might just be the destination for you! We’re looking for part-time writers to join our site and cover the daily news cycle, review gadgets, and offer your opinion on one of the […]

Come comment on this article: Want to join the Talk Android team? We’re hiring!

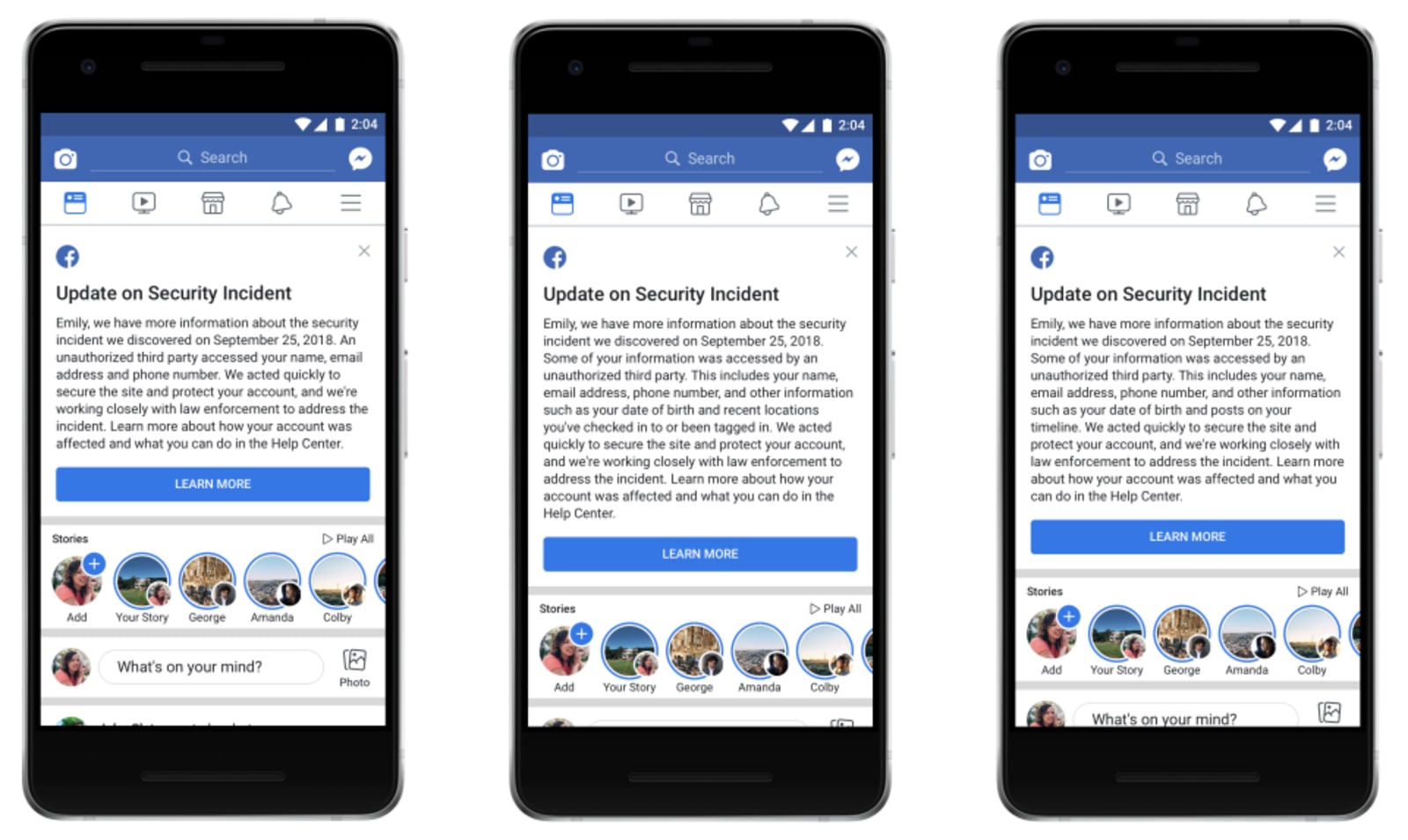

WSJ: Facebook believes spammers were behind its massive data breach

More than two weeks after Facebook revealed a massive data breach, we still don't know who was using the flaw in its site to access information on tens of millions of users. Now the Wall Street Journal reports, based on anonymous sources, that the co…

Engadget RSS Feed

Here’s how to see if you were affected by Facebook’s breach

Engadget RSS Feed

[TA Deals] We’re giving away a SNES Classic Edition through Talk Android Deals!

Did you want a SNES Classic but couldn’t find any in stock over the holidays? Don’t worry, we’ve got your back. We’re giving away one of Nintendo’s retro consoles through Talk Android Deals, and it’s incredibly simple to enter the contest. This console includes a ton of classic games, including hits like The Legend of […]

[Giveaway] We’re giving away Evo Max Tech21 cases for the Galaxy S9 and Galaxy S9+!

Need a case for your Galaxy S9? We’ve teamed up with Tech21 to help get you covered, literally. We’re giving away 10 black Evo Max cases from Tech21, and you’ll be able to pick a case for either the Galaxy S9 or Galaxy S9+, whichever size you have. All you have to do is […]

More information, faster removals, more people – an update on what we’re doing to enforce YouTube’s Community Guidelines

In December we shared how we’re expanding our work to remove content that violates our policies. Today, we’re providing an update and giving you additional insight into our work, including the release of the first YouTube Community Guidelines Enforcement Report.

Providing More Information

We are taking an important first step by releasing a quarterly report on how we’re enforcing our Community Guidelines. This regular update will help show the progress we’re making in removing violative content from our platform. By the end of the year, we plan to refine our reporting systems and add additional data, including data on comments, speed of removal, and policy removal reasons.

We’re also introducing a Reporting History dashboard that each YouTube user can individually access to see the status of videos they’ve flagged to us for review against our Community Guidelines.

Machines Helping to Address Violative Content

Machines are allowing us to flag content for review at scale, helping us remove millions of violative videos before they are ever viewed. And our investment in machine learning to help speed up removals is paying off across high-risk, low-volume areas (like violent extremism) and in high-volume areas (like spam).

Highlights from the report — reflecting data from October – December 2017 — show:

- We removed over 8 million videos from YouTube during these months.[1] Most of these videos were spam or people attempting to upload adult content, and they represent a fraction of a percent of YouTube’s total views during this time period.[2]

- 6.7 million were first flagged for review by machines rather than humans.

- Of those 6.7 million videos, 76 percent were removed before they received a single view.

For example, at the beginning of 2017, 8 percent of the videos flagged and removed for violent extremism were taken down with fewer than 10 views.[3] We introduced machine learning flagging in June 2017. Now more than half of the videos we remove for violent extremism have fewer than 10 views.
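The report's headline figures can be combined with simple arithmetic. This short Python sketch uses only the numbers quoted in this post; because the total is given as "over 8 million," treating it as exactly 8 million makes the machine-flagged share an upper bound, and the count of unseen removals is an approximation.

```python
# Figures quoted for October-December 2017. "Over 8 million" is treated as
# exactly 8 million, so the machine-flagged share below is an upper bound.
total_removed = 8_000_000
machine_flagged = 6_700_000
removed_before_any_view = 0.76  # fraction of the machine-flagged videos

# Share of all removals that machines flagged first.
machine_share = machine_flagged / total_removed
# Machine-flagged videos taken down before receiving a single view.
unseen_removals = machine_flagged * removed_before_any_view

print(f"Machine-flagged share of removals: at most {machine_share:.1%}")
print(f"Machine-flagged videos removed with zero views: about {unseen_removals / 1e6:.1f} million")
```

In other words, roughly 5.1 million of the removed videos were flagged by machines and taken down before anyone watched them.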

The Value of People + Machines

Deploying machine learning actually means more people reviewing content, not fewer. Our systems rely on human review to assess whether content violates our policies.

Last year we committed to bringing the total number of people working to address violative content to 10,000 across Google by the end of 2018. At YouTube, we’ve staffed the majority of additional roles needed to reach our contribution to meeting that goal. We’ve also hired full-time specialists with expertise in violent extremism, counterterrorism, and human rights, and we’ve expanded regional expert teams.

We continue to invest in the network of over 150 academics, government partners, and NGOs who bring valuable expertise to our enforcement systems, like the International Center for the Study of Radicalization at King’s College London, Anti-Defamation League, and Family Online Safety Institute. This includes adding more child safety focused partners from around the globe, like Childline South Africa, ECPAT Indonesia, and South Korea’s Parents’ Union on Net.

We are committed to making sure that YouTube remains a vibrant community with strong systems to remove violative content and we look forward to providing you with more information on how those systems are performing and improving over time.

— The YouTube Team

[1] This number does not include videos that were removed when an entire channel was removed. Most channel-level removals are due to spam violations and we believe that the percentage of violative content for spam is even higher.

[2] Not only do these 8 million videos represent a fraction of a percent of YouTube’s overall views, but that fraction of a percent has been steadily decreasing over the last five quarters.

[3] This excludes videos that were automatically matched as known violent extremist content at the point of upload, all of which would have zero views.

5 ways we’re toughening our approach to protect families on YouTube and YouTube Kids

In recent months, we’ve noticed a growing trend around content on YouTube that attempts to pass as family-friendly, but is clearly not. While some of these videos may be suitable for adults, others are completely unacceptable, so we are working to remove them from YouTube. Here’s what we’re doing:

- Tougher application of our Community Guidelines and faster enforcement through technology: We have always had strict policies against child endangerment, and we partner closely with regional authorities and experts to help us enforce these policies and report to law enforcement through NCMEC. In the last couple of weeks, we expanded our enforcement guidelines around removing content featuring minors that may be endangering a child, even if that was not the uploader’s intent. In the last week, we terminated over 50 channels and removed thousands of videos under these guidelines, and we will continue to work quickly to remove more every day. We also implemented policies to age-restrict (only available to people over 18 and logged in) content with family entertainment characters but containing mature themes or adult humor. To help surface potentially violative content, we are applying machine learning technology and automated tools to quickly find it and escalate it for human review.

- Removing ads from inappropriate videos targeting families: Back in June, we posted an update to our advertiser-friendly guidelines making it clear that we will remove ads from any content depicting family entertainment characters engaged in violent, offensive, or otherwise inappropriate behavior, even if done for comedic or satirical purposes. Since June, we’ve removed ads from 3M videos under this policy and we’ve further strengthened the application of that policy to remove ads from another 500K violative videos.

- Blocking inappropriate comments on videos featuring minors: We have historically used a combination of automated systems and human flagging and review to remove inappropriate sexual or predatory comments on videos featuring minors. Comments of this nature are abhorrent and we work with NCMEC to report illegal behavior to law enforcement. Starting this week we will begin taking an even more aggressive stance by turning off all comments on videos of minors where we see these types of comments.

- Providing guidance for creators who make family-friendly content: We’ve created a platform for people to view family-friendly content — YouTube Kids. We want to help creators produce quality content for the YouTube Kids app, so in the coming weeks we will release a comprehensive guide on how creators can make enriching family content for the app.

- Engaging and learning from experts: While there is some content that clearly doesn’t belong on YouTube, there is other content that is more nuanced or challenging to make a clear decision on. For example, today, there are many cartoons in mainstream entertainment that are targeted towards adults, and feature characters doing things we wouldn’t necessarily want children to see. Those may be OK for YouTube.com, or if we require the viewer to be over 18, but not for someone younger. Similarly, an adult dressed as a popular family character could be questionable content for some audiences, but could also be a video meant for adults, recorded at a comic book convention. To help us better understand how to treat this content, we will be growing the number of experts we work with, and doubling the number of Trusted Flaggers we partner with in this area.

Across the board we have scaled up resources to ensure that thousands of people are working around the clock to monitor, review and make the right decisions across our ads and content policies. These latest enforcement changes will take shape over the weeks and months ahead as we work to tackle this evolving challenge. We’re wholly committed to addressing these issues and will continue to invest the engineering and human resources needed to get it right. As a parent and as a leader in this organization, I’m determined that we do.

Johanna Wright, Vice President of Product Management at YouTube

Google’s Pixel 2 event is tomorrow and here’s what we’re expecting

Google seems to like being last when it comes to major product announcements, but maybe saving the best for last is the way to go. Starting tomorrow at 12pm Eastern Time, its Pixel 2 event will be action-packed. Along with the highly anticipated new Pixel phones, Google will announce a host of new products, […]

We’re live from SXSW 2017!

The past few weeks have been intense for the tech world, what with MWC and GDC. Now it's SXSW 2017's turn. We're on the ground in Austin, Texas to check out what the festival has to offer with its interactive, m…

Engadget RSS Feed

We’re liveblogging Apple’s ‘Hello Again’ MacBook launch!

Hello again, indeed! If it feels like we were just doing this, it's because… we were. Apple held an event last month to unveil the iPhone 7 and Apple Watch Series 2. There was much fanfare and we had quite a bit to say about it all. Now, just a few…

Engadget RSS Feed

These were our favorite games, hardware and toys from E3 2016

Another year, another massive, exciting E3 showcase. The biggest names in the video game industry brought out their newest games and hardware, including two console announcements (and controllers) from Xbox and a ton of fresh games from PlayStation w…

Engadget RSS Feed

YouTube’s first live 360-degree videos were little more than tech demos

Last week, YouTube started supporting live 360-degree video streams in a bid for more-immersive video content. Though users have been able to upload and watch 360-degree video for over a year, it's only now that Google is introducing the option to be…

Engadget RSS Feed

The best tech toys for kids will make you wish you were 10 again

Are you hunting for the perfect tech toy or gadget gift for your child? It can be tricky to find great tech for kids. There’s a lot to choose from, but what will go the distance? And what will end up at the bottom of a toy box?

The post The best tech toys for kids will make you wish you were 10 again appeared first on Digital Trends.

After Math: That’s it, we’re calling security

It's been a heck of a week. With the world still reeling from the Paris attacks, more people than ever are concerned with their personal security. That's why we're featuring five of this week's best posts about stuff that keeps us safe — and one a…

Engadget RSS Feed

In this installment of our audio IRL, managing editor Terrence O'Brien sings… er, types the praises of a band and a genre that isn't for everyone. Senior news editor Billy Steele gets nostalgic for his glory days as one of his favorite bands is bac…

This week's IRL tale has nothing to do with New Year's resolutions. Thankfully. Instead, Senior Editor Dan Cooper tries to replace his decent (but broken) baby monitor, and finds that cheaper models no longer cut it.

This month, Associate Editor Swapna Krishna is singing the praises of Dyson's advanced but pricey hair dryer. Compared with her old model, it's like night and day.

This month, we're making the most of our devices, whether that's by testing mobile photo-editing apps, trying out an iPad keyboard that matches its surroundings, or simply laying down a little too much cash for a pretty-looking Pixel 2 phone cas…

Faster removals and tackling comments — an update on what we’re doing to enforce YouTube’s Community Guidelines

We are committed to tackling the challenge of quickly removing content that violates our Community Guidelines and reporting on our progress. That’s why in April we launched a quarterly YouTube Community Guidelines Enforcement Report. As part of this ongoing commitment to transparency, today we’re expanding the report to include additional data like channel removals, the number of comments removed, and the policy reason why a video or channel was removed.

Focus on removing violative content before it is viewed

We previously shared how technology is helping our human review teams remove content with speed and volume that could not be achieved with people alone. Finding all violative content on YouTube is an immense challenge, but we see this as one of our core responsibilities and are focused on continuously working towards removing this content before it is widely viewed.

When we detect a video that violates our Guidelines, we remove the video and apply a strike to the channel. We terminate entire channels if they are dedicated to posting content prohibited by our Community Guidelines or contain a single egregious violation, like child sexual exploitation. The vast majority of attempted abuse comes from bad actors trying to upload spam or adult content: over 90% of the channels and over 80% of the videos that we removed in September 2018 were removed for violating our policies on spam or adult content.

Looking specifically at the most egregious but low-volume areas, like violent extremism and child safety, our significant investment in fighting this type of content is having an impact: Well over 90% of the videos uploaded in September 2018 and removed for Violent Extremism or Child Safety had fewer than 10 views.

Each quarter we may see these numbers fluctuate, especially when our teams tighten our policies or enforcement on a certain category to remove more content. For example, over the last year we’ve strengthened our child safety enforcement, regularly consulting with experts to make sure our policies capture a broad range of content that may be harmful to children, including things like minors fighting or engaging in potentially dangerous dares. Accordingly, we saw that 10.2% of video removals were for child safety, while Child Sexual Abuse Material (CSAM) represents a fraction of a percent of the content we remove.

Making comments safer

As with videos, we use a combination of smart detection technology and human reviewers to flag, review, and remove spam, hate speech, and other abuse in comments.

We’ve also built tools that allow creators to moderate comments on their videos. For example, creators can choose to hold all comments for review, or to automatically hold comments that have links or may contain offensive content. Over one million creators now use these tools to moderate their channel’s comments.¹

We’ve also been increasing our enforcement against violative comments:

We are committed to making sure that YouTube remains a vibrant community, where creativity flourishes, independent creators make their living, and people connect worldwide over shared passions and interests. That means we will be unwavering in our fight against bad actors on our platform and our efforts to remove egregious content before it is viewed. We know there is more work to do and we are continuing to invest in people and technology to remove violative content quickly. We look forward to providing you with more updates.

YouTube Team

1 Creator comment removals on their own channels are not included in our reporting as they are based on opt-in creator tools and not a review by our teams to determine a Community Guidelines violation.

YouTube Blog